Also called: Split Testing, Bucket Testing

See also: Multivariate Testing, Run Test Ads

Difficulty: Intermediate

Requires existing audience or product

Evidence strength

Relevant metrics: Unique views, Click through rate, Conversions

Validates: Desirability

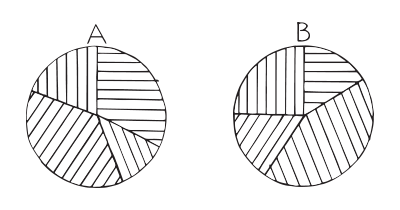

How: Test all variations of the same design simultaneously to determine which performs better as defined by a measurable metric of success. Before declaring a winning variation, make sure each one has received enough exposure, in terms of both impressions and time, for the difference between the winner and the others to be statistically significant.
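Showing variations simultaneously means each visitor has to be placed in one bucket and stay there for the life of the test. The following is a minimal sketch of one common way to do that, assuming each visitor has a stable identifier; the function name `assign_variation` and the experiment name are hypothetical, and testing tools may handle assignment differently.

```python
import hashlib


def assign_variation(user_id: str, experiment: str, variations=("A", "B")) -> str:
    """Deterministically map a user to one variation of an experiment."""
    # Hash the experiment name together with the user id so that the same
    # user always lands in the same bucket, and so that separate experiments
    # split the audience independently of each other.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Turn the hash into an index into the list of variations.
    bucket = int(digest, 16) % len(variations)
    return variations[bucket]


# Hypothetical usage: the same visitor gets the same variation on every call.
print(assign_variation("visitor-42", "signup-button-color"))
```

Hashing rather than drawing a random number on every page view keeps a returning visitor's experience consistent without storing any assignment state.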

Why: By measuring the impact that changes have on your metrics such as sign-ups, downloads, purchases, or whatever your goal might be, you can ensure that every change you implement produces positive results.

This experiment is part of the Validation Patterns printed card deck


Before the experiment

The first thing to do when planning any kind of test or experiment is to figure out what you want to test. To make critical assumptions explicit, fill out an experiment sheet as you prepare your test. We created a sample sheet for you to get started. Download the Experiment Sheet.

Rationale

A/B testing allows you to get more out of your existing traffic. While increasing traffic can be costly, increasing your conversion rate doesn’t have to be.

Discussion

There are a few things to consider when running A/B tests.

Only run your experiments as long as necessary

A reliable test can take time to run. How much time is needed depends on several factors, chiefly your conversion rate (a low conversion rate requires more traffic than a high one) and how much traffic you have. Testing tools will typically indicate when you have gathered enough data to draw a reliable conclusion. Once your test has concluded, turn it off and implement the desired variations on your site.
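The kind of check a testing tool runs before calling a result reliable can be approximated with a two-proportion z-test. This is a sketch under stated assumptions, not the method of any particular tool: the function name and the visitor and conversion counts are hypothetical, and it assumes a single look at the data once the test has ended, with at least one conversion recorded.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variations convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical data: control converts 300 of 10,000, variation 360 of 10,000.
z, p = two_proportion_z_test(conv_a=300, n_a=10_000, conv_b=360, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below the threshold chosen before the test (0.05 is a common convention) suggests the difference is unlikely to be chance alone. Checking repeatedly while the test is still running and stopping at the first significant reading inflates the false-positive rate, which is one reason to fix the sample size up front.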

High impact tests will produce definitive results faster

Of course, you can’t know ahead of time whether you’ll achieve a high impact, but you should have a strong rationale for why a proposed experiment is the best one to run next. The higher the impact, i.e. the percentage of change vs. the control, the smaller the required sample size. Consider the following example:

|  | Base conversion rate | % change vs control | Required sample size | Test days required |
|---|---|---|---|---|
| Example A | 3% | 5% | 72,300 | 72 |
| Example B | 3% | 10% | 18,500 | 18 |
| Example C | 3% | 30% | 2,250 | 2 |
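The relationship in the table can be explored with the standard sample size approximation for comparing two proportions. This is a rough sketch, not the calculation behind the table: the table does not state its significance level, statistical power, or whether its figures are per variation, so the defaults below (5% two-sided significance, 80% power) are assumptions and the output will not match the table's numbers. The function names and the `daily_visitors` input are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(base_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH variation to detect the given lift."""
    p1 = base_rate
    p2 = base_rate * (1 + relative_lift)
    # Critical values for the chosen significance level and power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_required(sample_per_variation, daily_visitors, variations=2):
    """Days needed if daily traffic is split evenly across all variations."""
    return math.ceil(sample_per_variation * variations / daily_visitors)


# Same base rate and lifts as the table; 1,000 visitors per day is assumed.
for lift in (0.05, 0.10, 0.30):
    n = sample_size_per_variation(base_rate=0.03, relative_lift=lift)
    print(f"{lift:.0%} lift: {n:,} per variation, {days_required(n, 1000)} days")
```

Because the required sample grows with the inverse square of the difference being detected, halving the expected lift roughly quadruples the traffic needed. That is the same pattern the table shows between Examples A and B.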

As your user or customer base grows, you can afford to experiment in several niche areas at once, or shift toward a higher volume of tests of smaller changes that can add up to big wins later on.

After the experiment

To make sure you move forward, it is a good idea to systematically record the insights you gained and the actions or decisions that follow from them. We created a sample Learning Sheet that will help you capture insights as you turn your product ideas into successes. Download the Learning Sheet.

Popular tools

The tools below will help you with the A/B Testing play.

- Optimizely: Enterprise A/B testing tool including personalisation and recommendation modules for more advanced optimisation.

- Visual Website Optimizer (VWO): Established A/B testing suite including testing & experimentation, research & user feedback, analytics & reporting, and targeting & personalization.

- A/B Tasty: A reasonably priced and simple to use tool that serves as a good starting point for companies just starting in conversion optimization.

- Adobe Target: Similar features to other enterprise testing tools such as Optimizely, including personalisation and recommendation modules for more advanced optimisation.

Examples

2012 Obama Campaign

Through A/B testing, the 2012 Obama campaign found that splitting the donation page up into 4 chunks increased the conversion rate by 49%.

Source: The Secret Behind Obama’s 2012 Election Success: A/B Testing

Electronic Arts - SimCity

For one of EA’s most popular games, SimCity 5, a promotional banner for pre-ordering did not have its desired effect. Removing the promotional offer altogether in an A/B test, which made room for callouts to buy the game without any discount, drove 43.4% more purchases.

Source: 3 Real-Life Examples of Incredibly Successful A/B Tests

Related plays

- Universal Methods of Design by Bruce Hanington & Bella Martin

- Hacking Growth by Sean Ellis & Morgan Brown

- The Real Startup Book by Tristan Kromer, et al.