Concierge vs. Wizard of Oz Experiments

A side-by-side comparison.

Both Concierge and Wizard of Oz experiments are lean prototyping methods where you manually deliver a product’s value proposition without building full technology.

In other words, you “do the hard work” behind the scenes to test an idea before automating it. These approaches let startups validate assumptions quickly and cheaply, but they are often confused with one another.

The key distinction is that a Wizard of Oz test hides the human effort from the user, whereas a Concierge test highlights personal service. This difference has cascading effects on user experience, learning outcomes, costs, and when each method is most useful. Below, we compare Concierge vs. Wizard of Oz across several dimensions, with real examples to illustrate each point.

Fake it till you make it

User awareness and experience

In a Wizard of Oz prototype, users do not know a human is behind the scenes. In a Concierge test, they interact openly with a human provider.

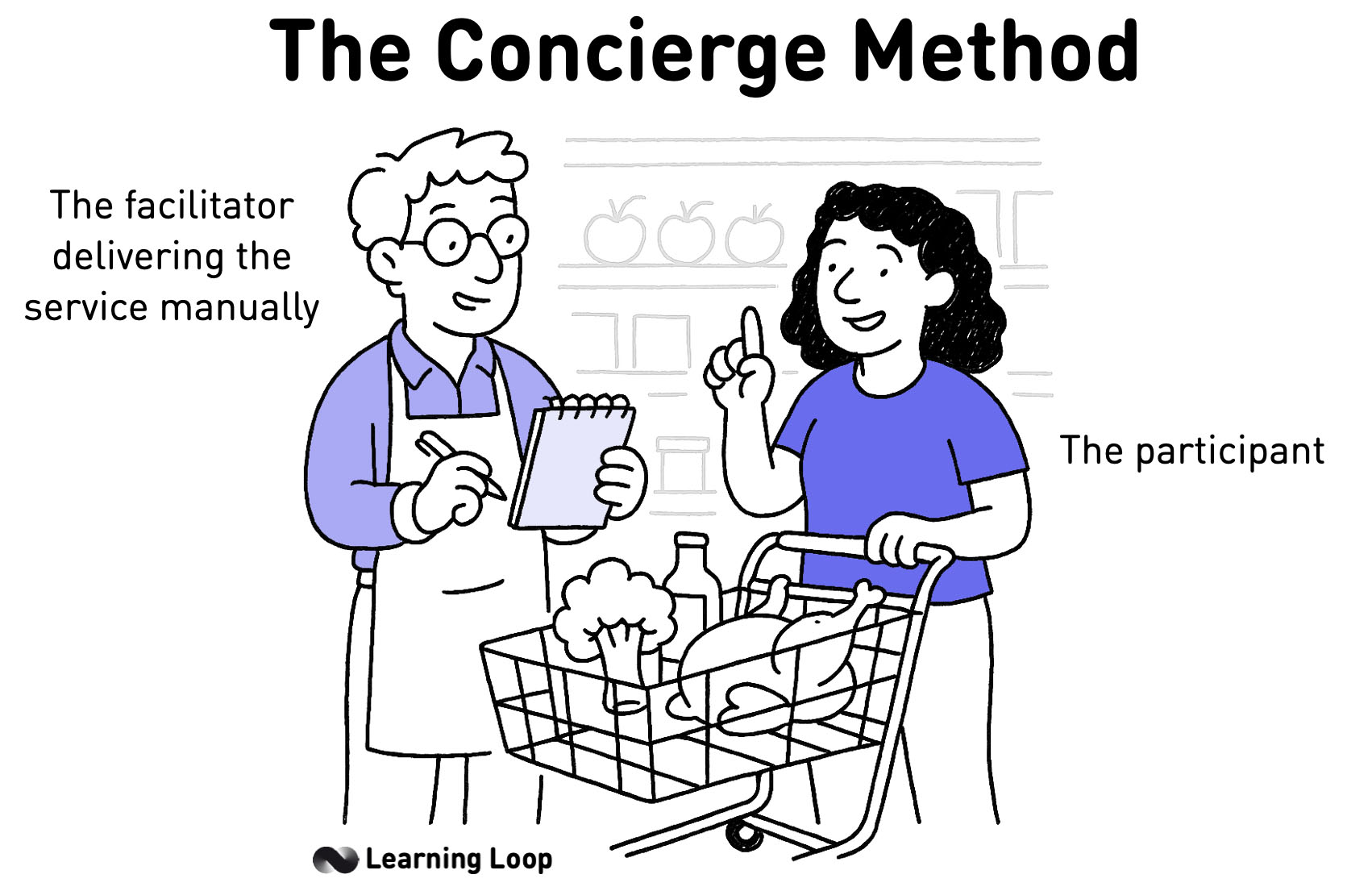

Concierge experiments

The user is fully aware that a human is involved in delivering the service. In fact, this upfront transparency is part of the experience – much like a hotel concierge personally attending to a guest. Because customers know a real person is helping them, a Concierge MVP often provides extra touch and care beyond what an eventual automated product could. For example, early Wealthfront provided financial advice by having team members sit down with customers, pen and paper in hand, to craft personalized portfolio plans. Similarly, Food on the Table’s CEO manually went grocery shopping with users to plan their meals. In these cases, users understood they were receiving a manual, high-touch service – which often delighted them and delivered more than the bare-bones product would. This human contact can itself be a value proposition, creating a rich experience that engages customers (as long as the context is appropriate – for instance, shoppers loved Food on the Table’s personal touch, whereas a surprise human intervention on a private WebMD search might feel intrusive).

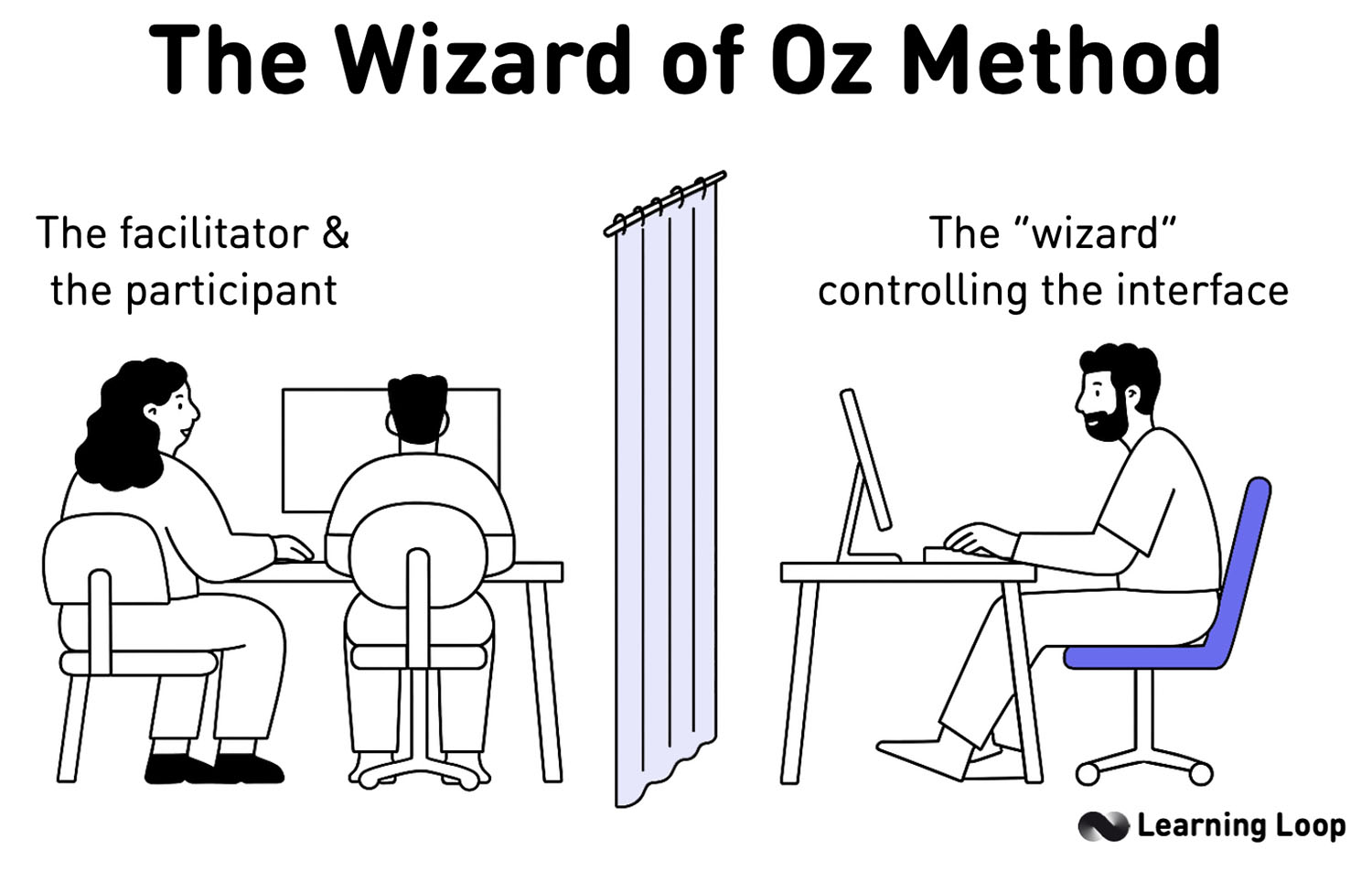

Wizard of Oz experiments

The user believes they are interacting with a fully functional, automated product. All the manual work happens behind a curtain (figuratively and, as in the classic metaphor, sometimes literally with a “wizard” hidden out of sight). From the user’s perspective, the experience should feel like using a normal app or service – nothing more, nothing less. For example, users of the Q&A startup Aardvark simply typed questions and got answers as if an algorithm handled it, while in reality interns were manually routing questions to experts via instant messaging. CardMunch users thought the app magically digitized business cards, but behind the scenes humans on Amazon Mechanical Turk transcribed the cards and the app just returned the results. The goal is for the Wizard of Oz prototype to deliver the promised value without tipping off the user that it’s manual. At most, users might notice slight delays or imperfect efficiency (since a human isn’t as fast as software), but as long as the core value is delivered, they experience the product as if it were real. In short, Wizard of Oz hides the human; Concierge flaunts the human – a fundamental difference in user awareness that shapes all other aspects of these experiments.

Interaction style and service delivery

Concierge: Because a human operator is front-and-center, the interaction is typically personal, high-touch, and flexible.

The concierge can talk directly with the customer, ask questions, and tailor the service on the fly. This often means meeting or communicating one-on-one (in person, by phone, or via a personal email/chat) to understand the user’s needs and deliver a custom solution.

For instance, in a concierge test for a meal-planning service, the founder might personally interview a user about their dietary preferences and then hand-craft a meal plan, adjusting based on the user’s feedback in real time. This hands-on approach lets you deliver near-“white glove” service, adapting to each customer. Every customer might receive a slightly different, optimized experience, which helps you learn what really delights them. The downside is that this interaction doesn’t mimic a self-service product – it’s deliberately better (more supportive) than the eventual product will be. You’re essentially testing whether solving the problem in a manual, unscalable way creates value for the customer. If customers aren’t thrilled even when you bend over backwards to help them, a scalable product version is unlikely to impress them either.

Wizard of Oz: The interaction style is through a fake automated interface – the user clicks buttons, enters information, or uses a UI just as they would in a finished product.

The difference is that behind that interface, you (the team) are secretly performing the tasks. There is no direct human-to-human interaction from the user’s point of view; you make it feel like the software or system itself is responding.

For example, Zappos famously tested whether people would buy shoes online by setting up a simple e-commerce website. When an order came in, founder Nick Swinmurn would go to a store, buy that exact pair of shoes, and ship it to the customer. To the buyer, it felt like a seamless online purchase from a store with a large inventory, even though every order was fulfilled manually in an ad-hoc way. This approach demands that you design a credible user interface or process so the experience is realistic. You usually have to limit the scope of what the user can do so that your manual work stays manageable (for instance, Aardvark’s team only routed questions that their staff could handle, and CardMunch might have limited how quickly cards were processed). The Wizard of Oz interaction is about simulation: letting users use a prototype as if it were real, so you can observe their natural behavior and reactions without intervening or guiding them directly.

Setup and implementation effort

Concierge setup: Running a concierge experiment is typically straightforward in terms of technology – you often don’t need to build any product interface at all. Instead, the effort goes into the manual work and logistics of delivering the service. Setting up a concierge test might be as simple as recruiting a few target users and scheduling time to work with them personally. In some cases, it’s literally done with pen and paper, spreadsheets, or off-the-shelf tools. For example, to test a personal shopping service, you might advertise the service and then personally take each customer through a shopping trip or purchase items for them individually. Wealthfront’s concierge MVP didn’t involve coding an app; it involved certified financial advisors spending hours with each client to devise an investment strategy.


Because there’s no software “wrapper”, the setup is more about your personal capacity and any materials you need to serve the customer. However, organizing a concierge test can be time-intensive: you need to plan how you’ll manually handle requests, coordinate with users one-on-one, and possibly train any team members who will act as “concierges.”

Essentially, you’re doing things that don’t scale in order to validate the solution. Paul Graham famously advised startups to “do things that don’t scale” in early stages, and the Concierge method is the epitome of that – accept the manual effort as a temporary cost to learn what users want.

Wizard of Oz setup: This approach usually requires creating a fake front-end or prototype that users will interact with. While you don’t build the full back-end or automation, you may need to develop a simple website, app mockup, or interactive demo to serve as the facade. The quality of this front-end should be just high enough that users believe it’s a working product. For instance, the team behind the home cleaning startup “Get Maid” built a very skinny mobile app: users could tap a button to request a cleaning, which merely sent an alert to the founders, who would then manually arrange a cleaner and send a confirmation back to the user.


Setting up a Wizard of Oz test often involves scripting the workflow so that when a user takes an action, the team knows how to respond behind the scenes. It can be more technically involved upfront – you might wireframe screens or write some throwaway code – but you deliberately skip any complex algorithms. Instead, you plan for humans to carry out those complex tasks.

A classic example from IBM in the 1970s involved a fake computer system for speech-to-text: users spoke to a computer, which seemed to transcribe their speech, but really a person in the next room was typing out what they said. In terms of implementation, you need to ensure the human “wizards” have the tools and information to do their job quickly (maybe a dashboard to see user inputs, a chat interface to send outputs, etc.). Overall, a Wizard of Oz test can be resource-intensive to run, especially if you have many users or complex tasks to simulate – sometimes requiring multiple people acting as wizards in shifts. The trade-off is that this effort is still far less than building the full product, and it’s temporary until you validate the concept.
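To make the scripted workflow concrete, here is a minimal Python sketch of the plumbing that could sit behind a facade like Get Maid's. It rests on stated assumptions: the class name `WizardBackend`, its methods, and the in-memory queue are all hypothetical stand-ins for whatever form, spreadsheet, or dashboard a real team would use. The user-facing `submit` call returns a system-style acknowledgement. Meanwhile a human operator pulls pending requests with `next_pending` and records the outcome with `fulfill`. The user later sees that outcome as an ordinary confirmation.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass
from itertools import count
from typing import Optional


@dataclass
class Request:
    """One user action captured by the facade (hypothetical structure)."""
    id: int
    user: str
    payload: str
    status: str = "pending"          # stays "pending" until a human wizard fulfills it
    response: Optional[str] = None   # text shown to the user once fulfilled


class WizardBackend:
    """Hypothetical Wizard of Oz back end: looks automated, but a human does the work."""

    def __init__(self) -> None:
        self._ids = count(1)
        self._queue: deque[Request] = deque()
        self.requests: dict[int, Request] = {}

    # --- User-facing side: what the app's button would call ---
    def submit(self, user: str, payload: str) -> str:
        req = Request(next(self._ids), user, payload)
        self._queue.append(req)
        self.requests[req.id] = req
        # Neutral, system-style wording: nothing hints that a person will handle it.
        return f"Request #{req.id} received. We'll confirm shortly."

    def status(self, request_id: int) -> str:
        req = self.requests[request_id]
        if req.status == "fulfilled" and req.response is not None:
            return req.response
        return "Processing..."

    # --- Wizard side: the operator's "dashboard" ---
    def next_pending(self) -> Optional[Request]:
        # Oldest request first, so no user waits longer than necessary.
        return self._queue.popleft() if self._queue else None

    def fulfill(self, request_id: int, response: str) -> None:
        req = self.requests[request_id]
        req.status = "fulfilled"
        req.response = response


if __name__ == "__main__":
    backend = WizardBackend()
    print(backend.submit("alice", "2-hour cleaning, Saturday 10am"))
    job = backend.next_pending()  # a founder sees this on the dashboard...
    if job is not None:
        # ...arranges a cleaner by hand, then records the outcome for the user.
        backend.fulfill(job.id, "Confirmed: a cleaner is booked for Saturday 10am.")
        print(backend.status(job.id))
```

The user-facing methods never expose the queue. That means replacing the human `fulfill` step with real automation later would not change anything the user sees.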

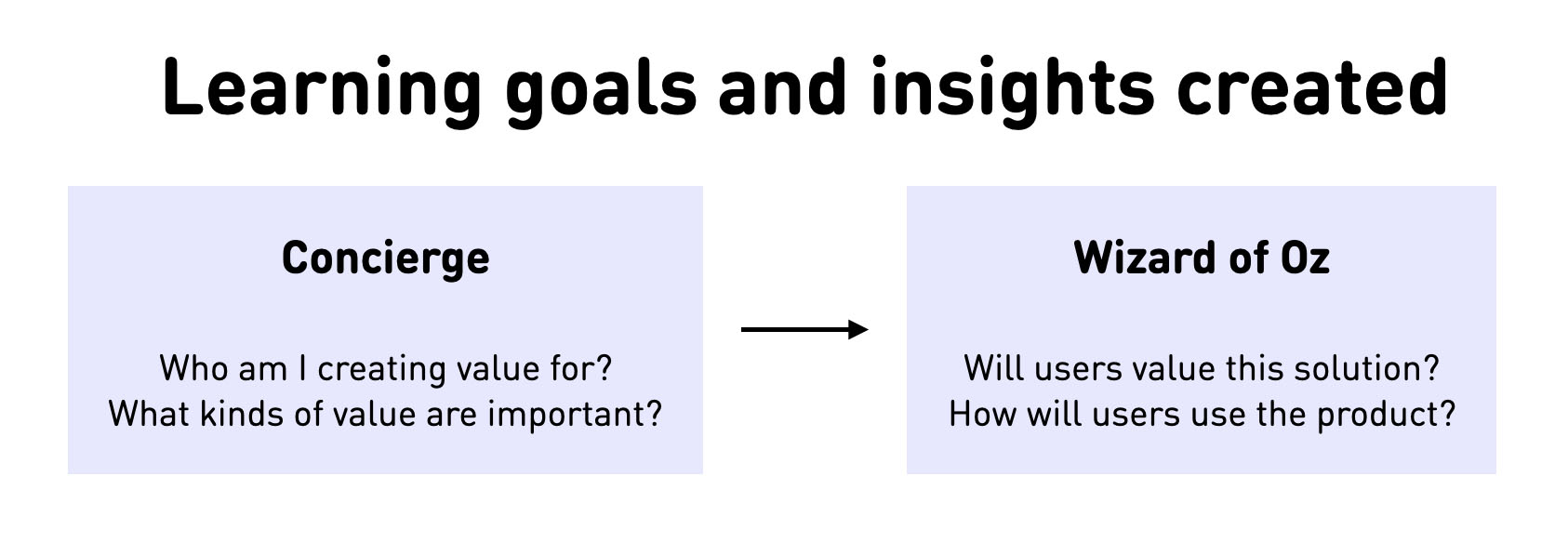

Learning goals and insights

Concierge: This method shines when your goal is exploratory learning – discovering how to solve a problem and what users really need, before you’ve nailed down a specific solution. Because you’re directly engaging with customers, you gather rich qualitative feedback and can iterate on the service in real time.

A concierge test helps answer questions like:

- “Does this solution truly solve the user’s problem?”

- “Which features or steps are really necessary to delight the customer?”

It’s considered a generative experiment: you may start with only a vague idea of the solution and, through manual delivery, generate new ideas and insights about what an optimal solution could be. For example, when Wealthfront first ran concierge-style trials, they didn’t have a strict algorithm or product flow in mind – by working closely with users, they learned how to present portfolio information and what guidance customers needed, which informed the design of their eventual automated service.

The human touch does introduce bias in results; users might give positive feedback because of the personal service or extra hand-holding. Therefore, a concierge experiment is not ideal for validating a very specific feature or solution hypothesis (it can easily yield false positives/negatives since the experience is overly optimized by human help). Instead, it’s meant to help you converge toward a solution hypothesis. Use concierge testing when you need to learn what the solution might look like or why users behave a certain way, not to rigorously prove a pre-defined solution works.

Wizard of Oz: This method is usually used as an evaluative experiment to test a clear hypothesis about a solution’s viability or user behavior. Because users interact with what appears to be a real product, you can gather quantitative data and realistic user reactions to specific features or flows.

A Wizard of Oz test helps answer:

- “Will users actually use this service as intended if we build it?”

- “Which features are essential or which choices do users make when using the product?”

For instance, Zappos’s experiment answered whether customers would buy shoes online without trying them on, a critical viability assumption. Wizard of Oz prototypes allow you to validate or falsify a solution hypothesis before investing in automation. If users don’t engage with the fake-but-functional prototype, that’s a strong signal the concept might not work, regardless of how much tech you could throw at it. This approach yields less biased feedback on the solution than concierge, since users aren’t being coddled by a person and will react to inconveniences or UI issues honestly.

You’ll mostly collect usage data and observe behavior: for example, which menu options people click, how long they wait, whether they come back, etc., as well as any qualitative feedback if you debrief users afterwards. The insights from a Wizard experiment tend to be focused on tuning or validating the product concept (e.g. confirming demand, identifying which features matter most), rather than generating new solution ideas from scratch. In Lean Startup terms, Wizard of Oz is an evaluative product experiment (testing if your proposed solution delivers value), whereas Concierge is a generative method (learning what a solution should be).
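Conversion rates and drop-off points can be computed from a simple event log. The Python sketch below assumes a hypothetical log of `(user, step)` pairs and a hypothetical four-step funnel. The step names are illustrative and are not taken from any of the examples in this article. A user counts toward a step only if they also reached every earlier step, and each step's rate is measured relative to the previous step.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical funnel for a Wizard of Oz prototype; step names are illustrative only.
FUNNEL = ["visited", "submitted_request", "received_result", "returned"]


def funnel_report(
    events: list[tuple[str, str]], steps: list[str] = FUNNEL
) -> list[tuple[str, int, float]]:
    """Return (step, users_reaching_step, conversion_from_previous_step) per step.

    A user counts as reaching a step only if they also reached every earlier step.
    """
    users_by_step: dict[str, set[str]] = defaultdict(set)
    for user, step in events:
        users_by_step[step].add(user)

    report: list[tuple[str, int, float]] = []
    reached: set[str] | None = None
    for step in steps:
        if reached is None:
            current = set(users_by_step[step])
            rate = 1.0  # the first step is the baseline
        else:
            current = reached & users_by_step[step]
            rate = len(current) / len(reached) if reached else 0.0
        report.append((step, len(current), rate))
        reached = current
    return report


if __name__ == "__main__":
    # Made-up sample log purely for illustration, not real experiment data.
    sample = [
        ("u1", "visited"), ("u2", "visited"), ("u3", "visited"), ("u4", "visited"),
        ("u1", "submitted_request"), ("u2", "submitted_request"),
        ("u1", "received_result"), ("u2", "received_result"),
        ("u1", "returned"),
    ]
    report = funnel_report(sample)
    for step, n, rate in report:
        print(f"{step:<18} {n:>3} users  {rate:.0%} of previous step")
    worst = min(report[1:], key=lambda row: row[2])
    print(f"Largest drop-off at: {worst[0]}")
```

Requiring users to pass through earlier steps keeps the rates honest. Otherwise someone who reached a later step through a direct link could inflate a later step's conversion.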

Cost and scalability

Concierge cost: By design, concierge MVPs do not scale. You’re expending manual effort for each customer, so the cost (in time, and often money) grows linearly with every additional user. It’s not uncommon for a concierge test to feel like a full-on consulting project: for a complex B2B solution, a team might spend weeks or months working hands-on with a single client to deliver the promised value. That would not be cost-effective to sustain long-term. However, in the short term you accept these costs as the price of learning. The heavy involvement can even allow you to charge customers during the test (since they’re getting a premium service).

Some startups offset concierge costs by charging a fee or subscription for the manual service – which not only tests willingness to pay but also helps fund the experiment (e.g. Food on the Table’s manual meal-planning service charged $10/month even when it was just the founder doing the work). Still, you must carefully limit the scope: run concierge tests with a small number of users that you can manage personally. Once you’ve learned what you need (or hit a point where each new manual customer isn’t revealing new insights), it’s time to stop and automate the most valuable pieces. In summary, Concierge MVPs trade scalability and efficiency for rich learning – they intentionally sacrifice automation to make sure you deeply understand your customer’s needs before scaling.

Wizard of Oz cost: A Wizard of Oz prototype is also labor-intensive, but its costs often come from needing some initial build and then maintaining the “wizard” operation behind the scenes. You might need to pay human workers (or reassign team members) to act as the hidden engine powering the product. For example, CardMunch had to pay people (via Mechanical Turk) per business card transcribed – an ongoing variable cost – but this was likely far cheaper and faster than developing advanced OCR software up front. Likewise, if you require experts on standby (like Aardvark’s interns or IBM’s typists), you must budget their time.

The advantage in cost is that you only expend resources on tasks users actually want done, avoiding waste on features nobody uses. If no one ends up using a feature, you’ve saved the engineering cost by faking it instead of building it. In terms of scalability, Wizard of Oz tests are meant to be temporary as well, but they can sometimes handle a moderate volume of users because the user-facing side is software. You can potentially serve many users if you have enough humans behind the curtain (for example, several wizards could simultaneously handle requests coming through a chatbot).

It’s still not truly scalable to thousands of users, but it’s more scalable than a concierge approach where each user expects dedicated attention. Think of Wizard of Oz as incrementally scalable: you might start by handling 5–10 users’ requests manually. If demand grows, you can progressively automate parts of the system (the “swap in automation module-by-module” approach) – for instance, automating easy tasks and keeping humans for the harder ones, until eventually little or no manual work is needed. Overall, both methods are intentionally unscalable early on, but Wizard of Oz is usually closer to a path of automation because the interface is already in place and you’re primarily swapping out your hidden humans for code when ready.
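The “swap in automation module-by-module” path can be sketched as a router that tries automated handlers first and quietly falls back to a human queue. Everything in this Python sketch is hypothetical. That includes `HybridRouter`, the `Handler` contract (return an answer, or `None` when the request is out of scope), and the example opening-hours handler. Counting how many requests each side handles gives a rough measure of how far automation has progressed.

```python
from __future__ import annotations

from collections import deque
from typing import Callable, Optional

# A handler returns an answer, or None if it cannot handle the request (hypothetical contract).
Handler = Callable[[str], Optional[str]]


class HybridRouter:
    """Hypothetical router: automated handlers first, hidden human 'wizards' as fallback."""

    def __init__(self) -> None:
        self.handlers: list[Handler] = []
        self.human_queue: deque[str] = deque()
        self.automated_count = 0
        self.human_count = 0

    def add_automation(self, handler: Handler) -> None:
        # Each newly automated "module" is registered here once it is reliable.
        self.handlers.append(handler)

    def handle(self, request: str) -> Optional[str]:
        for handler in self.handlers:
            answer = handler(request)
            if answer is not None:
                self.automated_count += 1
                return answer
        # No automation covers this request yet: a human answers it behind the curtain.
        self.human_queue.append(request)
        self.human_count += 1
        return None

    def automation_share(self) -> float:
        total = self.automated_count + self.human_count
        return self.automated_count / total if total else 0.0


def opening_hours(request: str) -> Optional[str]:
    # Illustrative "easy" task; the hours below are placeholder text.
    if "hours" in request.lower():
        return "We're open 9am to 5pm, Monday to Friday."
    return None


if __name__ == "__main__":
    router = HybridRouter()
    router.add_automation(opening_hours)
    print(router.handle("What are your hours?"))       # answered by automation
    print(router.handle("My order arrived damaged."))  # None: queued for a human
    print(f"Automated share: {router.automation_share():.0%}")
```

The response path is the same whether a handler or a human answers. Each module can therefore be automated without the user noticing the switch, which fits the incremental path described above.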

When to use each method

A Concierge MVP involves direct, customized service to a few users, which is ideal when you need to learn _what solution to build_. In contrast, a Wizard of Oz prototype simulates a finished product, which is ideal when you want to test _whether a known solution works_.

Concierge tests are best suited for early discovery and concept exploration. Use a concierge experiment when you need direct contact with users to uncover their pain points, refine your value proposition, or figure out the key features needed to solve their problem. It’s especially useful for complex, high-touch offerings (like B2B services or anything requiring expert knowledge) where delivering the service manually can validate whether customers find it valuable at all before you invest in automation.

If you’re unsure which part of the solution or user journey matters most, the concierge approach lets you try different tactics on the fly and observe user reactions. For example, a startup targeting a new healthcare solution might start by manually consulting patients and coordinating care as a service; this could reveal which aspects of the service have the biggest impact on patient satisfaction. In short, choose Concierge when you have more unknowns than knowns about the solution – it’s a tool for generating hypotheses and shaping the product vision through close customer interaction. Just remember not to treat concierge results as a strict proof of your solution’s scalability or market size (since you might be over-delivering service); use it to learn and iterate, then move to more scalable tests.

Wizard of Oz tests are ideal when you have a clearer hypothesis or product concept and want to validate specific aspects of the solution or user behavior. This could be in mid-stage product development, after you’ve identified a solution that might work and need evidence that users will embrace it. It’s often used for consumer apps and interfaces where you need to see how users navigate a UI or whether an automated service would meet their expectations. For instance, if you believe an AI-powered chatbot could solve customer support questions, you might implement a Wizard of Oz where a human agent responds behind a fake chatbot interface – testing if users are happy with the responses and if they actually use the service as expected.

Use Wizard of Oz to evaluate feasibility and desirability of a solution in a way that’s as close to reality as possible (minus the code). It is a great follow-up to a concierge test: once you have a hypothesis for a repeatable solution, you hide the human and see if the concept still flies. In lean terms, the Wizard of Oz method is for validating a solution hypothesis, while the Concierge method is for generating solution ideas and uncovering user needs. If you confuse the two or use the wrong method at the wrong time, you risk faulty signals – for example, trying to validate a precise metric with a concierge test could mislead you, and conversely, using a Wizard experiment too early might lock you into a suboptimal concept.

Both Concierge and Wizard of Oz experiments are powerful tools in a startup’s arsenal for de-risking ideas without writing heavy code. They complement each other: you might start with concierge tests to discover what your product should do, then transition to Wizard of Oz tests to validate that the product, when presented as a faux-automated system, actually delivers value to users. Always remember the core difference – “Wizard of Oz hides the human; Concierge flaunts the human.” Use that as a guiding principle when designing your experiments. By leveraging each method at the right time, you can learn rapidly and iterate toward a product that customers truly want, all while conserving development resources.

Wizard of Oz is for evaluating a known solution hypothesis, and Concierge is for learning and shaping what the solution should be. Keep the distinction clear, and you’ll avoid flawed assumptions and build a stronger business model grounded in reality.

Comparison chart: What are the main differences between a concierge experiment and a Wizard of Oz experiment?

Finally, let’s compare the two methods directly. They both involve manual work and aim to learn cheaply, but they differ in how the experiment is set up, when to use which, and what you can read from the results. Below is a side-by-side summary of the key differences:

| Aspect | Concierge MVP (Human-Powered Service) | Wizard of Oz MVP (Hidden Human Automation) |
|---|---|---|
| User interaction | High-touch, personal: you or your team interact with the user openly, like a personal concierge. Users know a human is involved. | Behind the curtain: a human (or team) performs tasks secretly, while the user interacts with what looks like a normal app or interface. Users assume it’s an automated system. |
| Setup required | Minimal tech: can often be done with no or very light software (even just email/phone or a simple landing page). The focus is on manually delivering the service in person or one-on-one. | Front-end facade needed: requires a believable user interface or prototype to simulate the product. You must build just enough UI to make it seem real, and have people ready to operate behind it. |
| Primary use case | Exploration and discovery: great when you’re not exactly sure what the solution should be. It helps generate ideas and understand user needs through close interaction. Use this to learn what to build (problem-solution fit). | Validation and testing: best when you have a specific solution or feature in mind and want to test whether it truly delivers value to customers. Use this to test whether your solution works for the user (solution-market fit). |
| Adaptability during test | Very flexible: you can tweak the service on the fly for each user. If the user asks for something, you can say “sure, let me do that” and see where it leads. This allows real-time pivots and follow-up questions. | Fixed experience: the user sees a set interface/workflow. You typically don’t change the product mid-test for each user; you collect data on how they use the given experience. (You can still iterate between sessions, but not during a session.) |
| Data and feedback | Rich qualitative feedback: direct conversations yield insights into why the customer likes or dislikes things. You’ll gather anecdotes, observe body language, and hear suggestions first-hand. Metrics are less about volume and more about depth of learning. | Behavioral and quantitative data: you observe what users do when using the prototype. You might track conversion rates, usage frequency, drop-off points, etc. You can still do user interviews afterwards, but during the test you mostly get logs and metrics. |
| Signal quality | Potentially inflated: because you’re delivering a “white-glove” service, users may be extra delighted. Positive responses could be skewed by the human touch (a known false-positive bias where customers love the service but might not love a self-service product as much). Also, because sample sizes are small, your evidence is mostly qualitative. | More realistic: users react to the product as if it’s real, so interest and usage are genuine indicators of demand. You’re more likely to get an accurate read on whether the solution itself (without the magic human boost) works for them. However, keep any shortcomings (e.g., slower responses) small enough that they don’t frustrate users and introduce a false negative. |
| Resource cost | Your time and effort: potentially very time-consuming per user, since you’re doing everything manually. Not scalable beyond a handful of customers; you might spend hours per customer or run a long-term pilot for a few clients. | Operational complexity: requires managing the “wizard” operations, which could involve multiple people behind the scenes for many users. Building the fake front-end also takes effort. It’s easier to scale to more users than concierge (since the interface can handle many, if you have enough wizards), but each additional user adds workload for the human operators. |
| Examples | Food on the Table: manual personal shopping for each customer. Wealthfront: founders personally curated investment advice. Get Maid: founders manually matching cleaners via text. Any scenario where a founder is personally delivering the value to test the concept. | Zappos: website front-end, with the founder manually fulfilling orders. Aardvark: IM service with staff manually routing Q&A pairs. Buffer: landing-page front-end, with manual posting to social accounts. IBM voice demo: fake software with a hidden typist. Users in each case were unaware of the human involvement. |

The Concierge MVP and Wizard of Oz methods serve different innovation stages and goals. The concierge approach is about learning by deeply engaging with a few customers, whereas Wizard of Oz is about testing your solution’s viability at a larger scale without full automation. Both can be extremely valuable when used at the right time.

Frequently Asked Questions

What is the difference between a Concierge MVP and a Wizard of Oz MVP?

The key difference is user awareness. In a Concierge MVP, the customer knows a human is manually delivering the service. In a Wizard of Oz MVP, the manual effort is hidden behind a facade—users believe the product is functioning automatically. Concierge is ideal for early exploration and deep learning; Wizard of Oz is better for validating a defined solution.

When should I use a Concierge MVP instead of a Wizard of Oz test?

Use a Concierge MVP when you’re still exploring what your solution should be. It allows you to interact directly with customers, tailor the experience, and discover their real needs. Use a Wizard of Oz test when you want to validate if a specific feature or product design works, simulating a finished experience without building the backend.

Is Wizard of Oz prototyping a good way to test product-market fit?

Yes, it can be. Wizard of Oz tests simulate a real product, so users behave as if it were fully functional. This makes it effective for observing real usage patterns, measuring interest, and testing key assumptions. However, it’s best used after you’ve identified a viable solution—not in the earliest discovery phase.

How do I run a Concierge MVP experiment step-by-step?

- Identify the core value you want to test.

- Design a manual way to deliver that value to a few users.

- Engage directly with each customer.

- Observe their reactions and ask questions.

- Iterate the service as you learn.

- Document insights and look for patterns before scaling or automating.

What are real-world examples of Wizard of Oz experiments?

Examples include:

- Zappos: Took online orders, but fulfilled them manually by buying shoes from local stores.

- CardMunch: Let users scan business cards, but used humans to transcribe them behind the scenes.

- Aardvark: Routed user questions manually via chat while simulating an algorithm.

How do Wizard of Oz tests validate startup ideas?

They help you test a solution hypothesis by simulating a real product experience. If users engage with the fake interface, request features, or even pay, it’s a strong sign that the solution has demand—before you invest in development. You can also test usability and behavior metrics under realistic conditions.

Why do startups use Concierge MVPs before building a product?

Because they let you learn deeply and quickly without building anything. Startups use Concierge MVPs to talk directly with users, tailor experiences, and discover the pain points and preferences that should shape the product. It’s a low-risk way to validate if a problem is worth solving.

Can I charge users during a Concierge MVP test?

Yes, and it’s often recommended. Charging helps validate willingness to pay, which is a key part of product-market fit. Even a small fee indicates whether customers see enough value in your manually delivered service. Just be transparent about the experience.

What tools do I need for a Wizard of Oz prototype?

At minimum, you’ll need:

- A basic user interface (mock website, app, or form).

- A way to receive user inputs (e.g., Google Forms, Typeform, or a fake dashboard).

- A “wizard” backend—you or your team manually fulfill requests based on what users do.

- Optionally, a lightweight automation layer (e.g., Zapier or Airtable) to manage inputs and responses.

How do I explain the difference between Concierge and Wizard of Oz tests to my team?

Try this:

“A Concierge MVP is like us personally walking a customer through the product—full service, high touch, no automation. A Wizard of Oz test is like watching a user interact with our product as if it were real, while we secretly power it from behind the curtain.”

That simple framing helps clarify intent and sets expectations for implementation and learning goals.
