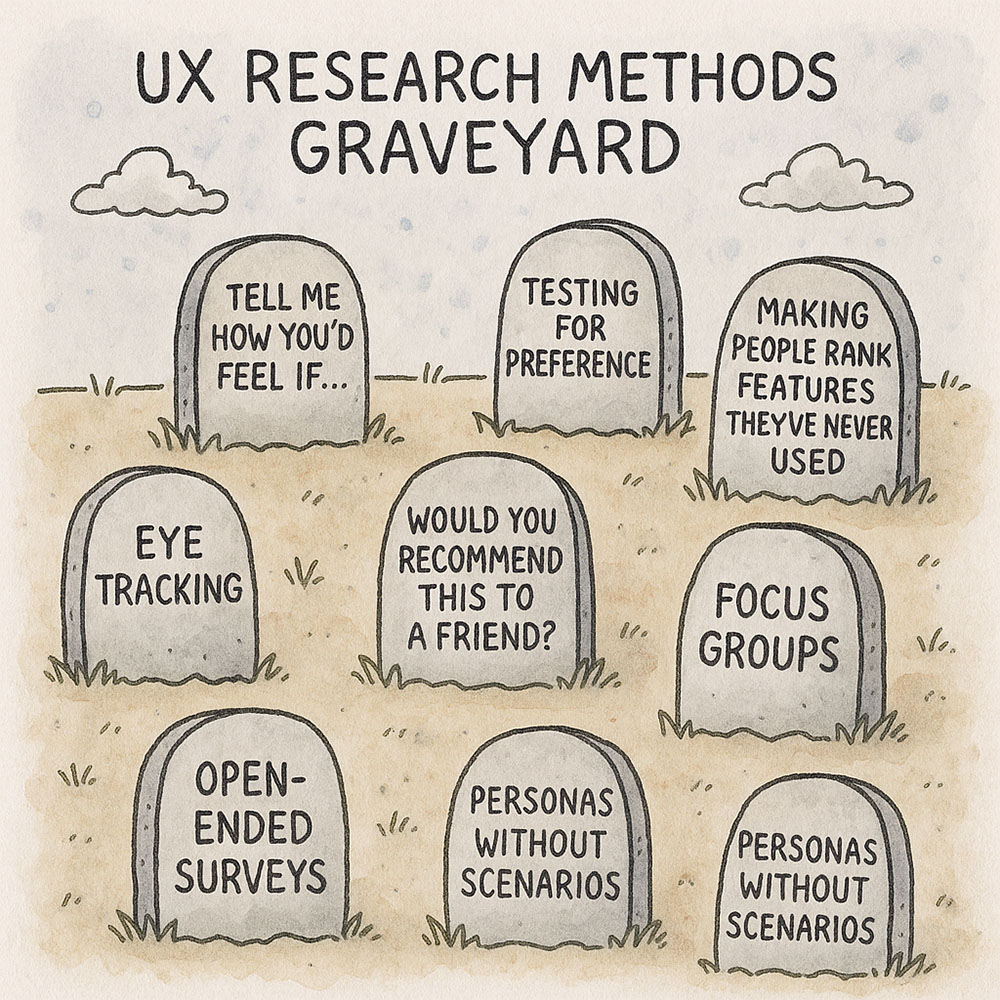

Keep away from these 8 bad UX research practices

And what to do instead

UX research evolves fast, yet many teams still rely on outdated techniques that no longer produce useful insights.

User research methods that belong in the graveyard

To help you avoid false signals and wasted effort, we’ll walk through eight methods that often mislead—and explain what to do instead. These aren’t just critiques. They’re practical, proven ways to shift your research from guesswork to grounded insight.

This post was inspired by Nikki Anderson’s recent LinkedIn post.

Strong product discovery means deeply understanding customer problems, validating potential solutions through observable behavior, and testing assumptions before scaling. Effective teams build evidence gradually—through small, fast iterations—rather than assuming clarity upfront.

1. Hypothetical Questions: “How would you feel if…”

Let’s start with a common interview trap: asking users to imagine how they’d feel about a hypothetical feature. These questions seem engaging but rarely yield trustworthy data.

Why it fails: People are bad at predicting future behavior. They try to help by giving an answer, but it’s a guess, not evidence.

Try instead: Ground your research in real experience. Ask about past behavior: “Tell me about the last time you tried to solve this problem.” For new ideas, observe users in context with Contextual Inquiry or run Customer Interviews to surface actual needs. Better yet, give users a prototype and watch how they interact with it. Actions are more reliable than opinions.

2. Preference Testing Without Context

Once you know not to ask users to guess the future, it’s tempting to instead ask which design they prefer. But preference testing alone rarely gives actionable insight.

Why it fails: People might prefer the prettier layout but struggle to use it. You learn what they like, not what works.

Try instead: Run usability tests with realistic tasks. Observe what helps users succeed. Five-Second Tests or First Click Testing show where attention goes and whether the design supports user goals. Discovery should be grounded in behavioral evidence, not stated opinions.

3. Misusing NPS on Prototypes

From preferences, we often shift to opinions about loyalty—specifically, Net Promoter Score (NPS). But using NPS on early-stage concepts or prototypes can send the wrong signal.

Why it fails: NPS is built for established products. It asks how likely someone is to recommend a product, which presumes real experience with it. On a prototype, it’s too early for users to meaningfully rate advocacy.

Try instead: Ask users to complete a relevant task, then measure what they actually do. If you want to assess potential impact, follow up with behavioral metrics or engagement indicators, not just declarative feedback. Good discovery validates desirability through interaction—not through opinions about a product that doesn’t yet exist.
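To make "measure what they actually do" concrete, here is a minimal sketch of how a team might summarize observed task attempts from a prototype test. The `TaskAttempt` record, its fields, and the `summarize_task` helper are hypothetical names for illustration, not part of any particular research tool.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class TaskAttempt:
    """One participant's attempt at a task (hypothetical record shape)."""
    participant: str
    completed: bool
    seconds: float  # time spent on the task, whether or not it was completed


def summarize_task(attempts: list[TaskAttempt]) -> dict[str, Optional[float]]:
    """Return the completion rate and the median time of successful attempts."""
    if not attempts:
        raise ValueError("need at least one attempt to summarize")
    successful = [a for a in attempts if a.completed]
    completion_rate = len(successful) / len(attempts)
    # Median time is only meaningful for attempts that actually succeeded.
    median_seconds = median(a.seconds for a in successful) if successful else None
    return {
        "completion_rate": completion_rate,
        "median_seconds_when_completed": median_seconds,
    }


# Illustrative data only, not results from a real study.
sessions = [
    TaskAttempt("p1", True, 40.0),
    TaskAttempt("p2", True, 60.0),
    TaskAttempt("p3", False, 120.0),
    TaskAttempt("p4", True, 50.0),
]
summary = summarize_task(sessions)
```

With a handful of participants these numbers are directional rather than statistically robust, so treat them as a prompt for follow-up questions about where and why people got stuck.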

4. Ranking Features Users Haven’t Tried

Like NPS, feature ranking surveys are popular—but flawed when based on assumptions. Asking users to prioritize features they’ve never seen often leads to misinformed decisions.

Why it fails: Without firsthand experience, users rely on labels, not value. The rankings reflect guesswork.

Try instead: Use Kano Analysis to assess which features delight or frustrate. Test with Fake Doors or Feature Stubs to see what users actually click on or try to use. If a feature matters, users will demonstrate interest. Let them act, not just tell.
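As a rough illustration of reading a Fake Door result, the sketch below counts how many of the users who saw the entry point went on to click it. The event names `"exposed"` and `"clicked"` and the `fake_door_interest` helper are assumptions made up for this example. Real analytics exports will look different.

```python
def fake_door_interest(events: list[tuple[str, str]]) -> dict[str, float]:
    """Summarize a fake door test from (user_id, event_name) pairs.

    Counts unique users, so repeated clicks by one person are not
    mistaken for broader interest.
    """
    exposed = {user for user, name in events if name == "exposed"}
    # Only count clicks from users we know actually saw the fake door.
    clicked = {user for user, name in events if name == "clicked"} & exposed
    rate = len(clicked) / len(exposed) if exposed else 0.0
    return {
        "users_exposed": len(exposed),
        "users_clicked": len(clicked),
        "click_through_rate": rate,
    }


# Illustrative events only, not data from a real experiment.
log = [
    ("u1", "exposed"), ("u1", "clicked"), ("u1", "clicked"),
    ("u2", "exposed"),
    ("u3", "exposed"), ("u3", "clicked"),
    ("u4", "exposed"),
    ("u5", "clicked"),  # no recorded exposure, so this click is ignored
]
result = fake_door_interest(log)
```

Decide what click-through rate would count as meaningful interest before running the test, so the result informs the decision instead of being rationalized afterward.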

5. Overusing Eye Tracking

At this point, you might think about investing in eye-tracking tech. It looks impressive—but often tells you less than you think.

Why it fails: Heatmaps show attention, not comprehension. You may learn where users look, but not what they understand or why.

Try instead: Watch users complete tasks. Tools like Hotjar offer scrollmaps and clickmaps with less overhead. Use a Five-Second Test or a Pre/Post Test to see what users remember or how easily they find what they need. These methods provide actionable feedback based on behavior.

6. Focus Groups

Group feedback can seem efficient, but it also introduces bias. Next time you consider a focus group, ask what you’re really hoping to learn.

Why it fails: One person often dominates while others hold back. You get groupthink, not deep insight.

Try instead: Choose 1:1 Customer Interviews or Usability Tests where participants show you how they think through action. The best insights come from what users do, not what they claim in a group setting.

7. Open-Ended Surveys

Even with individual input, open-ended survey questions can underdeliver. Without clear structure, users’ responses may not translate into useful data.

Why it fails: You get scattered anecdotes. Too vague to analyze. Too inconsistent to act on.

Try instead: Use Micro Surveys embedded in the product at key touchpoints. Focus on behaviors: “What stopped you from completing this step?” or “What made you try this feature?” These responses are more anchored in action, making them more reliable for design decisions.

8. Personas Without Context

You’ve gathered insights, and now it’s time to build personas. But if they’re just demographics and quotes, they won’t help design anything meaningful.

Why it fails: A persona without scenarios becomes a caricature. It doesn’t guide decisions—it decorates slides.

Try instead: Use Prototype Personas grounded in specific goals and behaviors. Pair them with Scenario Mapping or Job Stories that reflect how people actually interact with your product. Personas should represent behavioral archetypes—not just marketing segments.

Retire Weak Methods, Embrace Real Validation

Old habits linger. These outdated research methods keep showing up because they’re familiar, fast, and easy to explain. But they often lead to weak insights and costly missteps.

Strong discovery practice means identifying critical assumptions, testing them quickly, and interpreting results with humility. It requires a shift from asking users what they want to watching how they behave—and being ready to change course when the data says so.

Explore the Validation Patterns to discover methods that give you confidence, not just data.

The best research doesn’t ask what users think—it observes what they do.

- LinkedIn post: Some research practices age like wine by Nikki Anderson