
Dark Patterns

Design patterns that manipulate users through deceptive design.

Also called: Deceptive Patterns

See also: Dark Reality, Humanize Design Goals, Self-Determination Theory

Relevant metrics: User Satisfaction Scores, Customer Support Tickets and Complaints, Churn Rate, Opt-Out vs. Opt-In Rates, User Feedback and Reviews, Customer Lifetime Value (CLV), and Cancellation and Unsubscription Rates

Dark patterns are deceptive design techniques used in user interfaces to manipulate users into actions they might not have intended, often benefiting the business at the user’s expense.

These actions include making unintended purchases, subscribing to services without clear consent, unknowingly sharing personal information, and getting stuck in subscriptions that are difficult to cancel. The designs exploit cognitive biases and psychological principles, such as default bias, the scarcity heuristic, and social proof, to steer users toward decisions that favor the business over their own best interests.

By leveraging these cognitive biases, designers can subtly influence user behavior. Many such techniques are documented in the Persuasive Patterns Playbook on this site. For example:

- Default Bias. Users tend to stick with default options, so setting defaults that favor the business can lead to unintended user consent.

- Scarcity Bias. Creating a perception of limited availability can pressure users into making quick decisions without thorough consideration.

- Social Proof. Displaying information about others’ actions can persuade users to follow suit, even if it’s not in their best interest.

Unlike ethical design practices that prioritize user experience and transparency, dark patterns intentionally create confusion, obscure important information, or present misleading options. Designers may also layer several dark patterns together, which can dramatically increase their effectiveness and manipulate consumer decisions without users realizing the extent to which they are being influenced. Because these tactics are seamlessly integrated into the interface, users find them hard to detect.

Key Takeaways

- Understanding dark patterns and their exploitation of cognitive biases is crucial for creating ethical designs that respect users and build trust.

- Combining multiple dark patterns can dramatically increase their manipulative effectiveness, intensifying the negative impact on users.

- Users often find it difficult to detect manipulative tactics, highlighting the importance of transparency and honesty in design practices.

- Obstructing user actions and hiding critical information can severely frustrate users and erode trust, emphasizing the need for straightforward, user-friendly solutions.

- Ethical design balances business objectives with user satisfaction, focusing on sustainable, trust-building practices rather than short-term gains.

Where did Dark Patterns originate?

The term “dark patterns” was coined in 2010 by British UX designer Harry Brignull. Recognizing a surge in manipulative online practices, Brignull established DarkPatterns.org to document and raise awareness about these unethical design strategies. His goal was to educate both users and professionals about the prevalence of deceptive practices in digital products.

Brignull categorized various dark patterns and provided real-world examples to highlight how companies manipulate user behavior. His work brought significant attention to the ethical responsibilities of designers and the importance of transparency in user interactions. By exposing these practices, Brignull aimed to encourage designers to prioritize user welfare over short-term business gains. His efforts contributed to increased scrutiny of dark patterns by regulatory bodies and helped spark global conversations about digital ethics.

Navigating Dark Patterns ethically

As gatekeepers of user experience, product managers and designers play a crucial role in ensuring that digital products are developed ethically. Understanding dark patterns and committing to ethical design principles is essential for building user trust and fostering long-term relationships.

Familiarity with various dark patterns is the first step toward avoiding them. By thoroughly understanding these manipulative techniques, designers can recognize and eliminate them from their work, ensuring that they do not unintentionally harm users. Here’s an exploration of some common dark patterns, including real-world examples:

- Hidden Costs involve extra fees or charges that are revealed only at the final stages of a transaction, catching users off guard after they’ve invested time in the purchase process. For example, an airline website might display a low ticket price initially but add taxes, baggage fees, and other surcharges during checkout. This tactic can also include pre-selected extras or add-ons presented in a way that makes users feel obliged to purchase them.

- Trick Questions use confusing language or misleading phrasing to lead users into making choices that benefit the business. For instance, during a subscription cancellation process, a user might encounter a prompt with options like “Continue” or “Cancel,” where “Continue” means proceeding with the cancellation, and “Cancel” means aborting the process. This intentional ambiguity can result in users unknowingly maintaining a subscription they intended to cancel.

- Scarcity Cues create a sense of urgency by indicating limited availability or time constraints, pushing users to make rushed decisions. Examples include messages like “Only 3 rooms left at this price!” or countdown timers indicating that an offer will expire soon. While sometimes based on actual stock levels, these cues are often exaggerated or fabricated to pressure users into immediate action.

- Activity Notifications display real-time updates about other users’ actions, such as “John from New York just purchased this item!” or “15 people are viewing this product right now.” These notifications leverage social proof to encourage users to follow suit, even when the activity is fabricated or outdated.

- Confirmshaming uses guilt-inducing language to manipulate users into opting into something. Decline options might be phrased negatively, such as “No thanks, I prefer to pay full price,” making users feel bad for opting out. This tactic is common in email subscription pop-ups on retail websites.

- Forced Continuity occurs when a free trial ends and the user is automatically charged without a clear reminder or an easy way to cancel the subscription. Companies might make signing up simple but create obstacles when users try to unsubscribe, such as requiring a phone call or a complex online process.

- Data Grabs involve requesting more personal information from users than is necessary for the transaction or service. For example, a simple newsletter sign-up might ask for a full name, date of birth, and address when only an email address is required. This excessive data collection can raise privacy concerns and may be used for unsolicited marketing or sold to third parties.

- Disguised Advertisements are ads presented in a way that makes them appear as regular content or search results. Clickbait headlines or sponsored articles that mimic genuine news stories can mislead users into engaging with promotional material unwittingly.

- False Hierarchy manipulates the visual presentation of options to nudge users toward a preferred choice. The desired option is prominently displayed with attractive colors and large fonts, while less profitable options are minimized or grayed out. For example, a software download page might highlight the paid version while making the free download link less noticeable.

- Redirection or Nagging involves persistently prompting users to perform certain actions, such as subscribing to a newsletter or downloading an app. Pop-ups that reappear after being closed or prompts that interfere with the user’s intended task can frustrate users and impede their experience.

- Sneak into Basket occurs when additional items are added to the user’s shopping cart without explicit consent. For instance, a travel booking site might automatically include travel insurance unless the user deselects it.

- Roach Motel describes situations where it’s easy for users to get into a scenario (like subscribing to a service) but difficult to get out of it. Cancellation processes may be hidden, require multiple steps, or involve contacting customer service directly.

- Privacy Zuckering tricks users into sharing more personal data than they intended. Complex privacy settings or default options that favor data sharing exploit users’ trust and cognitive biases.

- Price Comparison Prevention makes it difficult for users to compare prices by hiding costs until checkout or using non-standard units, preventing them from making informed decisions.

- Bait and Switch occurs when users set out to perform one action but a different, undesirable action happens instead. For example, a button labeled “Download” might actually start installing unrelated software.

- Friend Spam happens when apps request access to a user’s contacts under the pretense of helping them connect with friends but then send spam messages to those contacts without clear consent.

These real-world examples illustrate how dark patterns infiltrate everyday digital experiences across industries. Designers may not realize how damaging these patterns become when layered: used in combination, they reinforce each other and intensify the negative impact on user trust and satisfaction.

Do Dark Patterns have the same effect across cultures and demographics?

Dark patterns can have varying impacts on different user demographics. Vulnerable populations, such as children, the elderly, or individuals with cognitive impairments, may be more susceptible to manipulative design tactics. For example:

- Children may lack the critical thinking skills to recognize manipulative prompts, leading to unintended in-app purchases or data sharing.

- Elderly Users might be less familiar with digital interfaces and could be easily confused by deceptive designs, potentially leading to financial loss or privacy breaches.

- Non-Native Language Speakers might misinterpret complex language or misleading terms, increasing their vulnerability to dark patterns.

- Users with Disabilities may rely on assistive technologies that don’t convey subtle manipulative cues, making it harder to recognize and avoid dark patterns.

If you aim to create inclusive and ethical experiences for all users, test your designs with these groups in particular.

Cultural differences can influence how users perceive and are affected by dark patterns. What might be considered manipulative in one culture could be perceived differently in another due to varying norms, expectations, and levels of digital literacy. For example:

- Perception of Authority. In some cultures, users may be more inclined to trust and comply with prompts from websites or apps, making them more susceptible to dark patterns.

- Language nuances. Misleading language or complex terms may have different effects depending on linguistic structures and cultural contexts.

Legal definitions and regulations regarding dark patterns also vary across countries. The European Union, for example, has strict rules on user consent and data privacy under the GDPR, while other countries may have less stringent regulations. Designers must be aware of these variations to ensure compliance and ethical consistency across different markets.

Ethical design principles and best practices

Incorporating ethical design principles is not just about avoiding negative outcomes; it’s about actively fostering positive relationships with users. Working within a framework of ethical guidelines ensures that products are developed with integrity, respect, and a focus on long-term success. By committing to these principles, designers and product managers can create experiences that honor user autonomy and contribute to a trustworthy brand image.

- Transparency and honesty are foundational to ethical design. Being upfront about intentions, terms, and conditions builds trust and reduces confusion. Providing clear and accessible information about pricing, subscriptions, and data usage ensures that users are fully informed. Withholding critical details until the last step can quickly undermine that trust.

- User control and consent empower users to make informed decisions by providing clear options and obtaining explicit consent. Recognizing that exploiting cognitive biases can manipulate consumer decisions, designers should strive to create interfaces that respect user autonomy. This means avoiding default settings that favor the company over the user’s preferences and ensuring that consent is an active, deliberate choice.

- Simplicity and clarity in design help prevent misunderstandings and unintended actions. Using straightforward language and intuitive design elements makes it easier for users to navigate and understand the interface. Since many dark patterns go unnoticed, prioritizing transparency and simplicity is essential to avoid unintentionally deceiving users.

- Equal choice architecture involves presenting options without bias. For example, “Accept” and “Decline” buttons should be equally prominent, ensuring users aren’t unduly influenced by design elements like color, size, or placement. This fairness respects the user’s right to choose freely and supports informed decision-making.

- Accessibility ensures that designs are usable by all individuals, including those with disabilities. Following guidelines like the Web Content Accessibility Guidelines (WCAG) helps make products inclusive, broadening the user base and demonstrating a commitment to all users.

- Feedback and support provide avenues for users to express concerns or seek assistance. Easy access to customer support and encouragement of feedback help identify areas where users may experience confusion or frustration, allowing for continuous improvement and strengthening the user relationship.

- Continuous ethical review is about regularly assessing design practices to ensure alignment with ethical standards and legal requirements. Staying informed about updates in laws and industry best practices allows designers to adapt and maintain compliance, reflecting a proactive approach to ethics.

Embracing these principles will help you create meaningful, positive experiences that enhance user satisfaction and loyalty.

Legal and ethical implications

The use of dark patterns raises significant legal and ethical concerns. Regulatory bodies worldwide are increasingly cracking down on deceptive design practices, recognizing the harm they cause to consumers. Laws like the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in the United States include provisions against misleading practices related to user consent and data privacy.

With growing legal risks associated with certain design practices, designers and product managers must rethink their approach to compliance and ethics, anticipating stricter enforcement. Companies have faced fines and legal action for employing dark patterns that violate consumer protection laws. For example, hiding the cancellation option or manipulating users into sharing data can lead to significant penalties.

Industry standards and professional organizations play a crucial role in combating dark patterns. Organizations like the User Experience Professionals Association (UXPA) and the Interaction Design Association (IxDA) provide guidelines and promote best practices for ethical design. By adhering to these standards and participating in professional communities, designers can stay informed and committed to ethical practices.

There’s an increasing emphasis on protecting consumer rights online, with regulators focusing on transparency, fair treatment, and honesty in digital interactions. Understanding these legal implications is crucial for avoiding potential lawsuits and financial penalties. Designers must stay informed about current laws and anticipate future regulations to ensure their practices remain compliant.

Ethically, employing dark patterns undermines the trust between users and companies. It contradicts the principles of user-centered design and can damage a brand’s reputation. Users expect honesty and fairness in their interactions, and deceptive practices can lead to long-term negative perceptions.

The importance of building trust

Building and maintaining user trust is vital for the long-term success of any product or service. Ethical design plays a central role in fostering this trust. When users feel respected and confident that a company has their best interests in mind, they are more likely to remain loyal customers.

Dark patterns can erode long-term customer loyalty. By focusing on sustainable, trust-building practices rather than short-term gains, designers can foster lasting relationships with users. Trustworthy practices encourage users to continue using a product and recommend it to others, enhancing the company’s reputation in the marketplace.

Companies known for ethical practices are more likely to attract and retain customers, as well as top talent who value integrity. Ethical designs lower the risk of legal issues, penalties, and public backlash, safeguarding the company’s future. Respecting users leads to more satisfying interactions, enhancing overall user experience and encouraging positive word-of-mouth.

In an increasingly competitive market, trust becomes a differentiator. Users have many options, and they are more likely to choose brands that demonstrate transparency, honesty, and respect. By committing to ethical design, companies invest in their reputation and customer relationships, which are essential assets for long-term success.

Where is the line between persuasive design and manipulative Dark Patterns?

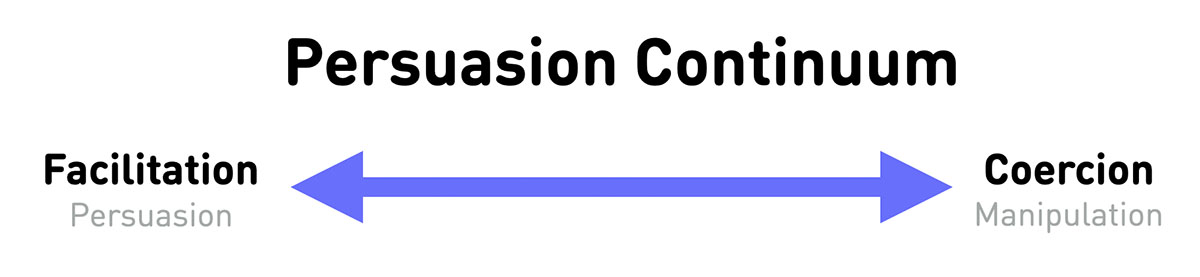

The distinction between persuasive design and manipulative dark patterns lies in the designer’s intent and in whether the user’s autonomy is respected. Persuasive design aims to guide users toward beneficial actions while respecting their ability to make informed choices. It leverages psychological principles to enhance user experience without deception.

For example, encouraging users to complete a profile by highlighting the benefits they will receive is a form of persuasive design. It informs and motivates without misleading.

In contrast, dark patterns manipulate users into actions that benefit the business at the user’s expense, often through deception or by exploiting cognitive biases unethically. The key differences include:

- Transparency. Persuasive design is transparent about intentions, while dark patterns obscure or mislead.

- User benefit. Persuasive design focuses on mutual benefit, whereas dark patterns prioritize business gains over user welfare.

- Respect for autonomy. Persuasive design respects user choices, while dark patterns undermine autonomy.

Understanding this distinction helps designers make ethical choices that enhance user experience without crossing into manipulation.

How do I find out if I’m applying Dark Patterns?

Auditing your design for dark patterns is an essential step toward ethical practice. Here’s how to identify and eliminate them:

- Review design elements critically. Examine your interface for any elements that might confuse or mislead users. Look for complex language, hidden options, or default settings that favor the company.

- Map user journeys. Analyze the steps users take to complete tasks. Identify any unnecessary obstacles or friction that might hinder actions like canceling a subscription.

- Seek external feedback. Conduct usability testing with a diverse group of users. Their feedback can reveal areas where the design might be unintentionally manipulative.

- Consult ethical guidelines. Refer to industry standards and guidelines provided by professional organizations. Compare your design against these benchmarks.

- Engage in ethical training. Invest in training for your team on ethical design principles. Awareness and education are key to recognizing and avoiding dark patterns.

- Stay informed about laws and regulations. Ensure that your design complies with relevant laws like GDPR and CCPA. Legal compliance often aligns with ethical practice.

- Use tools and resources. Apply checklists, frameworks, and tools designed to identify dark patterns. Resources like the Dark Patterns Tip Line can provide insights.

By systematically evaluating your design, you can uncover and address any dark patterns, aligning your product with ethical standards.
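Parts of such an audit can be automated to catch obvious problems before human review. The Python sketch below is hypothetical: the `Screen` and `Button` structures, the sample phrase list, and the "cancellation should not take more steps than sign-up" rule are illustrative assumptions, not an established tool or standard. It flags pre-ticked consent boxes (default bias), choice buttons of unequal prominence (False Hierarchy), likely Confirmshaming wording, and Roach Motel journeys.

```python
from dataclasses import dataclass, field

# Hypothetical, simplified description of one screen in a product flow.
# Field names are illustrative assumptions, not a standard schema.
@dataclass
class Button:
    label: str
    font_size: int
    highlighted: bool = False

@dataclass
class Screen:
    name: str
    prechecked_consent: list[str] = field(default_factory=list)  # consent boxes ticked by default
    buttons: list[Button] = field(default_factory=list)          # choice buttons shown together
    decline_text: str = ""                                       # wording of the opt-out option

# Assumed sample of guilt-inducing phrasings; a real list would need ongoing human curation.
SHAMING_PHRASES = ("i prefer to pay full price", "i don't want to save")

def audit_screen(screen: Screen) -> list[str]:
    """Return human-readable findings for one screen (empty list if nothing is flagged)."""
    findings = []
    # Default bias: consent should be an active choice, never pre-ticked.
    for box in screen.prechecked_consent:
        findings.append(f"{screen.name}: consent '{box}' is pre-ticked")
    # False hierarchy: competing choices should look equally prominent.
    if len(screen.buttons) >= 2:
        sizes = {b.font_size for b in screen.buttons}
        highlights = {b.highlighted for b in screen.buttons}
        if len(sizes) > 1 or len(highlights) > 1:
            findings.append(f"{screen.name}: choice buttons differ in prominence")
    # Confirmshaming: decline wording should be neutral.
    if any(phrase in screen.decline_text.lower() for phrase in SHAMING_PHRASES):
        findings.append(f"{screen.name}: decline text may be confirmshaming")
    return findings

def audit_journey(signup_steps: int, cancel_steps: int) -> list[str]:
    """Roach Motel check (assumed rule): leaving should not take more steps than joining."""
    if cancel_steps > signup_steps:
        return [f"cancellation takes {cancel_steps} steps vs {signup_steps} to sign up"]
    return []

# Example: a hypothetical newsletter pop-up exhibiting several problems at once.
newsletter = Screen(
    name="newsletter pop-up",
    prechecked_consent=["share data with partners"],
    buttons=[Button("Subscribe", 18, highlighted=True), Button("No thanks", 11)],
    decline_text="No thanks, I prefer to pay full price",
)

for finding in audit_screen(newsletter) + audit_journey(signup_steps=2, cancel_steps=6):
    print(finding)
```

A checker like this only surfaces candidates for discussion. Whether a design is actually manipulative still depends on the critical review and usability testing described in the steps above, since many dark patterns (misleading copy, fake urgency) cannot be detected from structure alone.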

Companies may justify the use of dark patterns by citing business pressures, competitive markets, or the pursuit of growth metrics like user engagement or revenue. Common justifications include:

- Short-term gains. Belief that dark patterns boost immediate conversions or sales.

- Competitive advantage. Perception that competitors use similar tactics, necessitating their use to stay relevant.

- User engagement metrics. Focus on metrics without considering the quality of user experience.

These justifications can be challenged by emphasizing the long-term negative consequences:

- Erosion of trust. Dark patterns damage user trust, leading to decreased loyalty and negative word-of-mouth.

- Legal risks. Regulatory penalties and lawsuits can result from deceptive practices, outweighing short-term gains.

- Brand reputation. Public exposure of manipulative tactics can harm the brand’s image, affecting customer acquisition and retention.

- Employee morale. Ethical lapses can impact team morale and make it harder to attract top talent who value integrity.

Presenting data and case studies that illustrate these consequences can persuade stakeholders to prioritize ethical design. Emphasizing the benefits of user trust, compliance, and sustainable growth supports a shift away from dark patterns.

With AI-driven personalization and mobile usage on the rise, designers need to be aware of new forms of dark patterns that could emerge in these contexts. AI algorithms might inadvertently create manipulative experiences, such as personalized price discrimination or overly persuasive recommendations. Mobile platforms may introduce subtle manipulations through notifications or app permissions.

Forward-thinking ethical design means anticipating these developments. Designers should watch for the ethical challenges that new technologies introduce and adapt their practices accordingly, treating ethics as a matter of ongoing education rather than a one-time review.

Frequently Asked Questions (FAQ)

Q: What is a dark pattern?

A: A dark pattern is a deceptive design technique used in user interfaces to manipulate users into taking actions they might not have intended. These manipulative practices exploit psychological biases and are crafted to benefit the business at the user’s expense, often leading to unintended purchases, unwanted subscriptions, or inadvertent sharing of personal information.

Q: What is the new name for dark patterns?

A: Dark patterns are increasingly being referred to as “deceptive patterns,” the term Harry Brignull now uses. The newer name describes these practices more directly by emphasizing that they deceive users, although “dark patterns” remains in wide use, including in some laws and regulatory guidance.

Q: What is the danger of dark patterns?

A: The dangers of dark patterns include erosion of user trust, negative user experiences, and potential legal repercussions for businesses. They can lead to unintended financial commitments, privacy breaches, and frustration among users. For businesses, employing dark patterns can result in damaged reputations, loss of customer loyalty, and penalties under consumer protection laws.

Q: What is an example of a dark pattern website?

A: An example of a website using dark patterns might be one that adds additional products to your shopping cart without clear consent (Sneak into Basket), or a subscription service that makes it intentionally difficult to cancel (Roach Motel). Specific company names are often discussed in articles and forums highlighting dark patterns, but it’s important to evaluate each site individually and note that practices may change over time.

Q: What is the problem with dark patterns?

A: The primary problem with dark patterns is that they undermine user autonomy and trust. By manipulating users into actions they did not intend, businesses prioritize short-term gains over ethical considerations and long-term relationships. This can frustrate users, lower their satisfaction, and harm a company’s reputation and legal standing.

Q: Are dark patterns ethical?

A: No, dark patterns are considered unethical because they involve deception and manipulation. They violate principles of honest communication and respect for user autonomy. Ethical design practices prioritize transparency, user control, and fairness, fostering trust and positive relationships between users and businesses.

Q: How common are dark patterns?

A: Dark patterns are unfortunately quite common across various industries and platforms. Studies and surveys have found that many popular websites and apps employ at least one type of dark pattern. The prevalence underscores the importance of awareness among users and a commitment to ethical design among professionals.

Q: What is the opposite of dark patterns?

A: The opposite of dark patterns is “ethical design” or “user-centered design.” These approaches focus on creating interfaces that are transparent, fair, and prioritize the user’s needs and intentions. Ethical design respects user autonomy, provides clear information, and avoids manipulative tactics.

Q: Why do dark patterns exist?

A: Dark patterns exist because they can produce short-term gains for businesses, such as increased sales, subscriptions, or user engagement. They may arise from competitive pressures, a focus on certain metrics, or a lack of awareness about ethical considerations. However, these gains often come at the expense of user trust and long-term success.

Q: What are common misconceptions about dark patterns?

A: Common misconceptions include believing that dark patterns are just aggressive marketing tactics or that they are acceptable if they lead to business growth. Some may think that users can easily detect and avoid them, minimizing their impact. In reality, dark patterns are deceptive practices that erode trust, can lead to legal issues, and often go unnoticed by users due to their subtle implementation.

Q: How can collaboration between stakeholders improve ethical design practices?

A: Collaboration between designers, developers, legal teams, marketers, and user advocates is essential for promoting ethical design. By working together, stakeholders can align on ethical standards, ensure compliance with regulations, and create user-centered products. Open communication and shared responsibility help in identifying potential ethical issues early in the design process.

Examples

LinkedIn

LinkedIn faced a class-action lawsuit over its “Add Connections” feature. Users who signed up were prompted to import their email contacts, and LinkedIn then sent repeated email invitations to those contacts without clear consent from the user, a practice known as “Friend Spam.” In 2015, LinkedIn settled the lawsuit for $13 million and adjusted its practices to provide clearer consent options.

Amazon

Amazon has been criticized for making it difficult to cancel Amazon Prime subscriptions, exemplifying the “Roach Motel” dark pattern. The cancellation process involved multiple steps and was not straightforward. Additionally, Amazon has been accused of “Hidden Costs” by adding items like insurance or subscription services to carts by default, requiring users to opt out manually. Users found themselves subscribed to services they didn’t intend to join or faced unexpected charges at checkout. Under pressure from consumer rights groups and regulators, Amazon has made efforts to simplify the cancellation process and improve transparency in the checkout process.

Facebook

Facebook has faced scrutiny for its complex privacy settings, which some argue are designed to encourage users to share more personal information than they might intend, a practice dubbed “Privacy Zuckering.” Users may inadvertently share personal data publicly, leading to privacy concerns and potential misuse of their information. Following regulatory pressures and public outcry, Facebook has periodically updated its privacy settings to be more transparent, though criticisms remain.

Booking.com

Booking.com frequently uses “Scarcity Cues” like “Only 1 room left!” and “15 people are viewing this hotel” to create a sense of urgency. While intended to inform, these messages can pressure users into making hasty decisions. Users may feel rushed into booking accommodations without fully considering their options, potentially leading to regret or dissatisfaction. European regulators have investigated such practices, leading Booking.com to commit to making these messages clearer and ensuring they reflect real-time data.

Ryanair

Ryanair has been known to add extras like travel insurance or priority boarding to customer bookings by default, requiring users to deselect them manually—examples of “Sneak into Basket” and “Hidden Costs.” Customers may end up paying for additional services they did not want or realize they had selected. After consumer complaints and regulatory attention, Ryanair adjusted its booking process to make optional extras clearer and require active selection by the user.

Microsoft Windows 10

During the rollout of Windows 10, Microsoft was criticized for aggressive upgrade prompts. Clicking the “X” to close the upgrade window sometimes initiated the upgrade instead, a “Bait and Switch” tactic. Persistent reminders exemplified “Nagging.” Users unintentionally upgraded their operating systems, leading to compatibility issues and frustration. Microsoft faced backlash and eventually changed the prompts to respect user intentions more accurately.


Are we exploiting cognitive biases unethically?

Are we aware of how cognitive biases like default bias, the scarcity heuristic, or social proof influence user decisions, and are we using this knowledge responsibly?

Are all options clearly presented?

Have we made sure that users can easily find and understand all available choices without being unduly influenced?

Is the language clear and unambiguous?

Are we using straightforward language, avoiding double negatives or confusing terms?

Are we respecting user autonomy?

Do users have real control over their decisions, without being manipulated by design elements?

Have we obtained explicit consent?

Are we clearly asking for permission, avoiding pre-ticked boxes or default opt-ins?

Is it easy to reverse decisions?

Can users easily unsubscribe, cancel services, or change settings without facing unnecessary obstacles? Are we making sure not to obstruct user actions?

Are we hiding critical information?

Have we provided all important details upfront, avoiding the practice of hiding information until the last step?

Are we prioritizing long-term trust over short-term gains?

Are our design choices focused on building lasting relationships, rather than exploiting users for immediate benefits?

Would we feel comfortable explaining our design choices publicly?

If our design practices were made public, would we be proud of them?

Are we complying with legal standards?

Have we ensured that our design complies with laws and regulations regarding consumer rights and data protection? Are we prepared for stricter enforcement?

Have we considered future trends?

Are we aware of how emerging technologies like AI and mobile platforms might introduce new ethical challenges, and are we prepared to address these proactively?

You might also be interested in reading up on:

- Harry Brignull @harrybr

- Tristan Harris @tristanharris

- Deceptive Patterns @darkpatterns

- Deceptive Patterns: Exposing the tricks tech companies use to control you by Harry Brignull (2023)

- Hooked: How to Build Habit-Forming Products by Nir Eyal (2014)

- Badass: Making Users Awesome by Kathy Sierra (2015)

- Dark Patterns by Harry Brignull

- Deceptive Design Patterns by Harry Brignull